Deep Learning & How to Choose the Right Model

Deep Learning

Although the term deep learning is commonly heard, it is not well defined.

For our purposes, “deep” refers to any neural-network-based model that contains more than one “hidden layer”. This includes everything from Multi-Layer Perceptrons all the way up to convolutional neural networks or recurrent neural networks with hundreds of hidden layers.

- Deep Learning: a subset of machine learning that uses artificial neural networks with many† layers to analyze and learn from complex data.

- † “Many” can be understood to mean “more than one”, but in practice it usually means significantly more than one.

Multi-Layer Perceptrons (MLPs)

The MLP is the original “neural network”.

Useful when you have a “traditional” (bag-of-variables) dataset, need a nonlinear model, and have enough data to train it.

Beware of making MLPs too “deep”: they can become hard to train.
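To make the idea of stacked hidden layers concrete, here is a minimal sketch of an MLP forward pass in plain Python. The layer sizes, the ReLU activation, and the hand-picked weights are all illustrative assumptions; a real MLP would learn its weights from data.

```python
def relu(v):
    """Rectified linear unit: pass positives, clamp negatives to zero."""
    return max(0.0, v)


def identity(v):
    return v


def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(W . inputs + b)."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def mlp_forward(x, layers):
    """Pass x through each (weights, biases, activation) layer in turn."""
    for weights, biases, activation in layers:
        x = dense(x, weights, biases, activation)
    return x


# Hypothetical weights: 2 inputs -> 3 hidden -> 2 hidden -> 1 output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]], [0.0, 0.0, 0.0], relu),
    ([[1.0, 0.0, 1.0], [0.0, 1.0, 0.0]], [0.0, 0.0], relu),
    ([[1.0, -1.0]], [0.5], identity),
]

output = mlp_forward([2.0, 1.0], layers)
print(output)
```

With two hidden layers, this network is already “deep” under the definition used here.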

Convolutional Neural Network (CNN)

- Based (loosely) on concepts taken from models of how the human visual cortex operates.

- Rely on convolution operations, which apply the same filter at every position, to provide some translation invariance.

- Learn visual features in a hierarchical fashion.

- Have also proven useful in non-vision applications.

- Convolutional Neural Networks: https://anhreynolds.com/blogs/cnn.html

- Understanding Convolutions: https://towardsdatascience.com/intuitively-understanding-convolutions-for-deep-learning-1f6f42faee1

- Convolution Arithmetic: https://github.com/vdumoulin/conv_arithmetic

- 10 CNN Architectures: https://towardsdatascience.com/illustrated-10-cnn-architectures-95d78ace614d

Image from https://anhreynolds.com/blogs/cnn.html

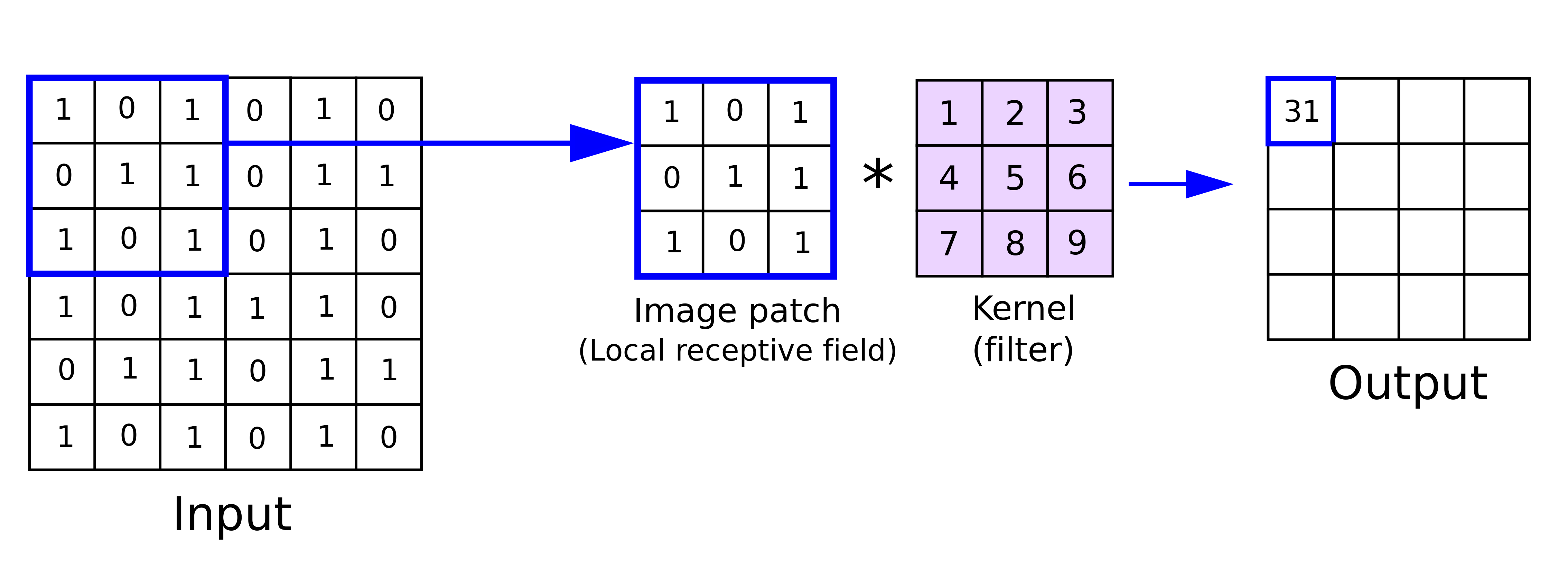

Convolution

Each \(N\times N\) patch in the Input is “compared” (via dot product) to the filter (or kernel) and the result creates a single pseudo-pixel in the Output.
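Written out, and assuming a stride of 1 with no padding (the slide does not specify either), the sliding dot product between an input \(X\) and an \(N\times N\) kernel \(K\) is:

\[
\text{Output}[i, j] = \sum_{a=0}^{N-1} \sum_{b=0}^{N-1} X[i+a,\, j+b] \; K[a, b]
\]

Under those assumptions, an \(H\times W\) input produces an \((H-N+1)\times(W-N+1)\) output. Strictly speaking this is cross-correlation (the kernel is not flipped), which is what deep learning libraries typically compute under the name “convolution”.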

Image from https://anhreynolds.com/blogs/cnn.html

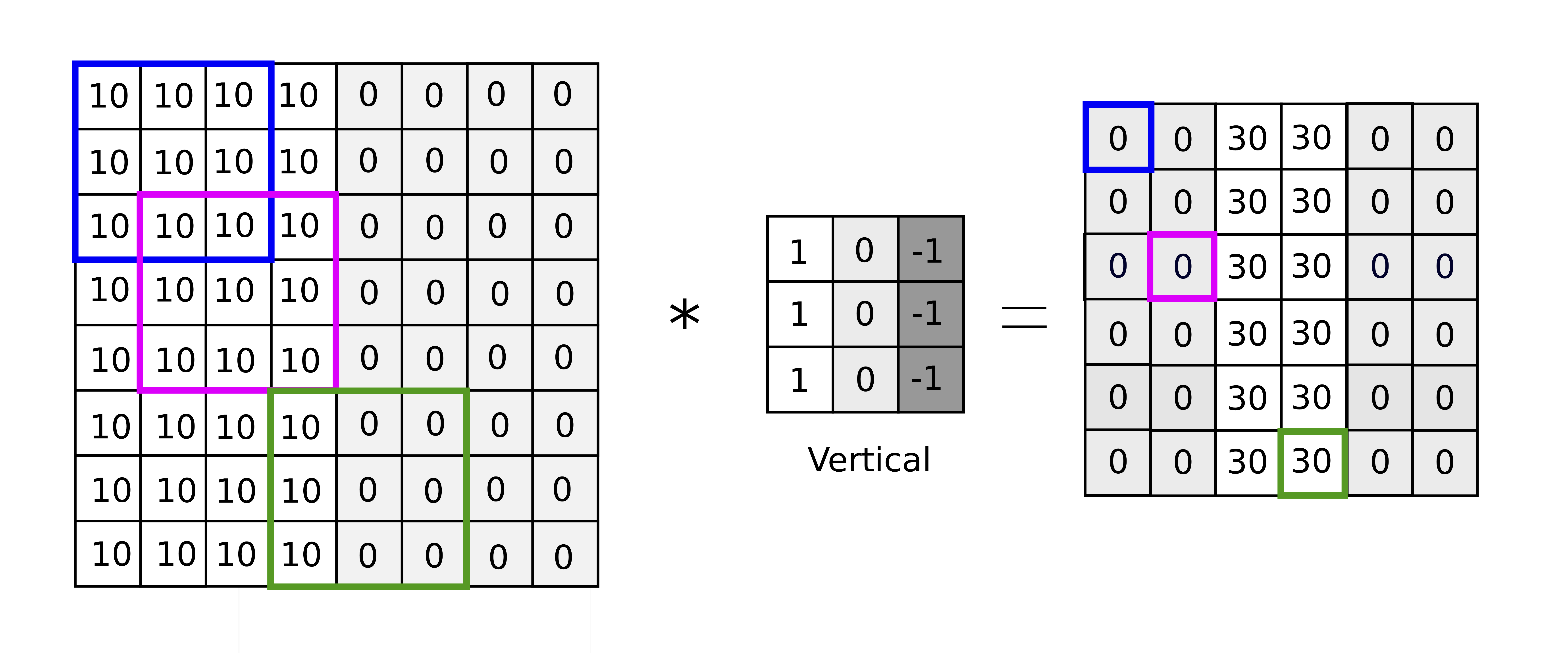

Convolution Filters: Edge Detection

This example kernel performs vertical edge detection. Notice how it responds strongly at the boundary between the “lighter” and “darker” pixels in the input.

In practice, we don’t hand-craft the filters; we let the network learn them. (In other words, the values in the filter are weights, or parameters, of the model.)

Image from https://anhreynolds.com/blogs/cnn.html
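The edge-detection behavior can be reproduced in a few lines of plain Python. This is a sketch only: the kernel values below are a common hand-written vertical-edge filter and may differ from the one in the image, and the input is a made-up image that is bright on the left and dark on the right.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (stride 1, no padding) and take
    the dot product of the kernel with each patch."""
    n = len(kernel)
    out_h = len(image) - n + 1
    out_w = len(image[0]) - n + 1
    return [
        [
            sum(
                image[i + a][j + b] * kernel[a][b]
                for a in range(n)
                for b in range(n)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Illustrative 4x6 input: bright (10) on the left, dark (0) on the right.
image = [[10, 10, 10, 0, 0, 0] for _ in range(4)]

# Assumed vertical-edge kernel: positive left column, negative right column.
kernel = [
    [1, 0, -1],
    [1, 0, -1],
    [1, 0, -1],
]

response = conv2d(image, kernel)
for row in response:
    print(row)
# Each row prints [0, 30, 30, 0]: zero in flat regions, large at the edge.
```

In a trained CNN the nine kernel values would be learned parameters rather than constants chosen by hand.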

Recurrent Neural Network (RNN)

- Typically used for time-series data and Natural Language Processing.

- The output for each new input also depends on previous input(s).

- Also work well when combined with CNNs for visual tasks.

- Illustrated Guide to RNNs: https://towardsdatascience.com/illustrated-guide-to-recurrent-neural-networks-79e5eb8049c9

- Understanding RNNs and LSTMs: https://towardsdatascience.com/understanding-rnn-and-lstm-f7cdf6dfc14e

- RNN Effectiveness: https://karpathy.github.io/2015/05/21/rnn-effectiveness/

RNN Image: https://upload.wikimedia.org/wikipedia/commons/b/b5/Recurrent_neural_network_unfold.svg

LSTM Cell image: https://upload.wikimedia.org/wikipedia/commons/5/56/LSTM_cell.svg
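The dependence on previous inputs comes from a hidden state that is carried from one step to the next. Below is a minimal sketch of a plain (non-LSTM) recurrent update, assuming the common form \(h_t = \tanh(W_{xh} x_t + W_{hh} h_{t-1} + b)\); the weight values are hypothetical.

```python
import math


def rnn_step(x, h_prev, w_xh, w_hh, b):
    """One recurrent update: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b)."""
    return [
        math.tanh(
            sum(w * xi for w, xi in zip(w_xh[k], x))
            + sum(w * hi for w, hi in zip(w_hh[k], h_prev))
            + b[k]
        )
        for k in range(len(b))
    ]


def run_rnn(sequence, w_xh, w_hh, b):
    """Feed a sequence through the cell, starting from a zero state."""
    h = [0.0] * len(b)
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_xh, w_hh, b)
        states.append(h)
    return states


# Hypothetical weights: 1 input feature, 2 hidden units.
w_xh = [[0.5], [-0.3]]
w_hh = [[0.1, 0.2], [0.0, 0.4]]
b = [0.0, 0.1]

# Both sequences end with the same input (0.0) but start differently.
states_a = run_rnn([[1.0], [0.0]], w_xh, w_hh, b)
states_b = run_rnn([[-1.0], [0.0]], w_xh, w_hh, b)
print(states_a[-1])
print(states_b[-1])
```

The two final states differ even though the final input is identical, because the hidden state remembers what came before.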

Autoencoder

- The autoencoder is one of the few unsupervised deep learning models.

- It learns to reproduce its input as precisely as possible by first encoding it to a latent feature-space representation, then decoding that representation back to the original dimensionality.

- Can be used for compression, denoising, and more.

- Can be used to pre-train weights for “few-shot learning”.

- What is AE used for?: https://towardsdatascience.com/auto-encoder-what-is-it-and-what-is-it-used-for-part-1-3e5c6f017726

- Autoencoders (Stanford): http://ufldl.stanford.edu/tutorial/unsupervised/Autoencoders/

- Autoencoders: https://www.jeremyjordan.me/autoencoders/

- LSTM Autoencoders: https://machinelearningmastery.com/lstm-autoencoders/

Autoencoder Image: https://upload.wikimedia.org/wikipedia/commons/2/23/Autoencoder-BodySketch.svg

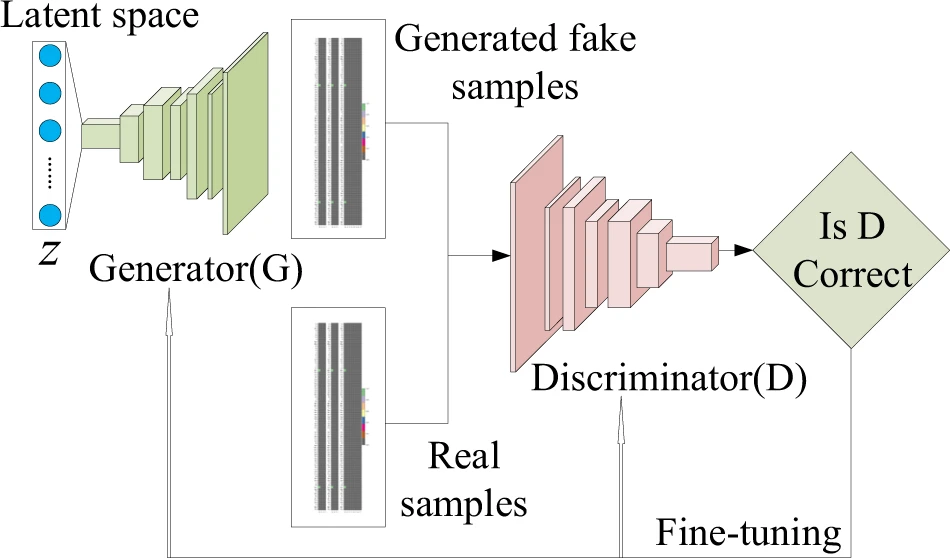
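As a toy illustration of encode-then-decode, the sketch below trains a tiny linear autoencoder that squeezes 2-D points through a 1-D latent value. Everything here is an assumption made for illustration: the data (points on the line \(y = 2x\)), the linear encoder and decoder, the initial weights, and the learning rate. Real autoencoders use nonlinear, multi-layer encoders and decoders.

```python
def encode(x, w):
    """Compress a 2-D point to one latent value: z = w . x."""
    return w[0] * x[0] + w[1] * x[1]


def decode(z, v):
    """Expand the latent value back to 2-D: x_hat = v * z."""
    return [v[0] * z, v[1] * z]


def reconstruction_loss(data, w, v):
    """Mean squared distance between each input and its reconstruction."""
    total = 0.0
    for x in data:
        x_hat = decode(encode(x, w), v)
        total += (x_hat[0] - x[0]) ** 2 + (x_hat[1] - x[1]) ** 2
    return total / len(data)


def train(data, w, v, lr=0.05, steps=500):
    """Plain gradient descent on the reconstruction loss. No labels needed."""
    w, v = list(w), list(v)
    n = len(data)
    for _ in range(steps):
        grad_w = [0.0, 0.0]
        grad_v = [0.0, 0.0]
        for x in data:
            z = encode(x, w)
            x_hat = decode(z, v)
            r = [x_hat[0] - x[0], x_hat[1] - x[1]]  # reconstruction error
            r_dot_v = r[0] * v[0] + r[1] * v[1]
            for j in range(2):
                grad_v[j] += 2 * r[j] * z / n
                grad_w[j] += 2 * r_dot_v * x[j] / n
        w = [w[j] - lr * grad_w[j] for j in range(2)]
        v = [v[j] - lr * grad_v[j] for j in range(2)]
    return w, v


# Illustrative data: 2-D points that really have only one degree of freedom.
data = [[t, 2 * t] for t in (-1.0, -0.5, 0.0, 0.5, 1.0)]
w0, v0 = [0.5, 0.1], [0.3, 0.3]

loss_before = reconstruction_loss(data, w0, v0)
w, v = train(data, w0, v0)
loss_after = reconstruction_loss(data, w, v)
print(loss_before, loss_after)
```

The training signal is the input itself, which is why no labels are required.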

Generative Adversarial Network (GAN)

- Combines the concept of a discriminative model with a generative model.

- Trains both models together: the generator tries to “fool” the discriminator, while the discriminator tries to tell generated samples from real ones.

- The end result is a pretty good generator and a pretty good discriminator.

Image: Dan, Y., Zhao, Y., Li, X. et al. Generative adversarial networks (GAN) based efficient sampling of chemical composition space for inverse design of inorganic materials. npj Comput Mater 6, 84 (2020). https://doi.org/10.1038/s41524-020-00352-0

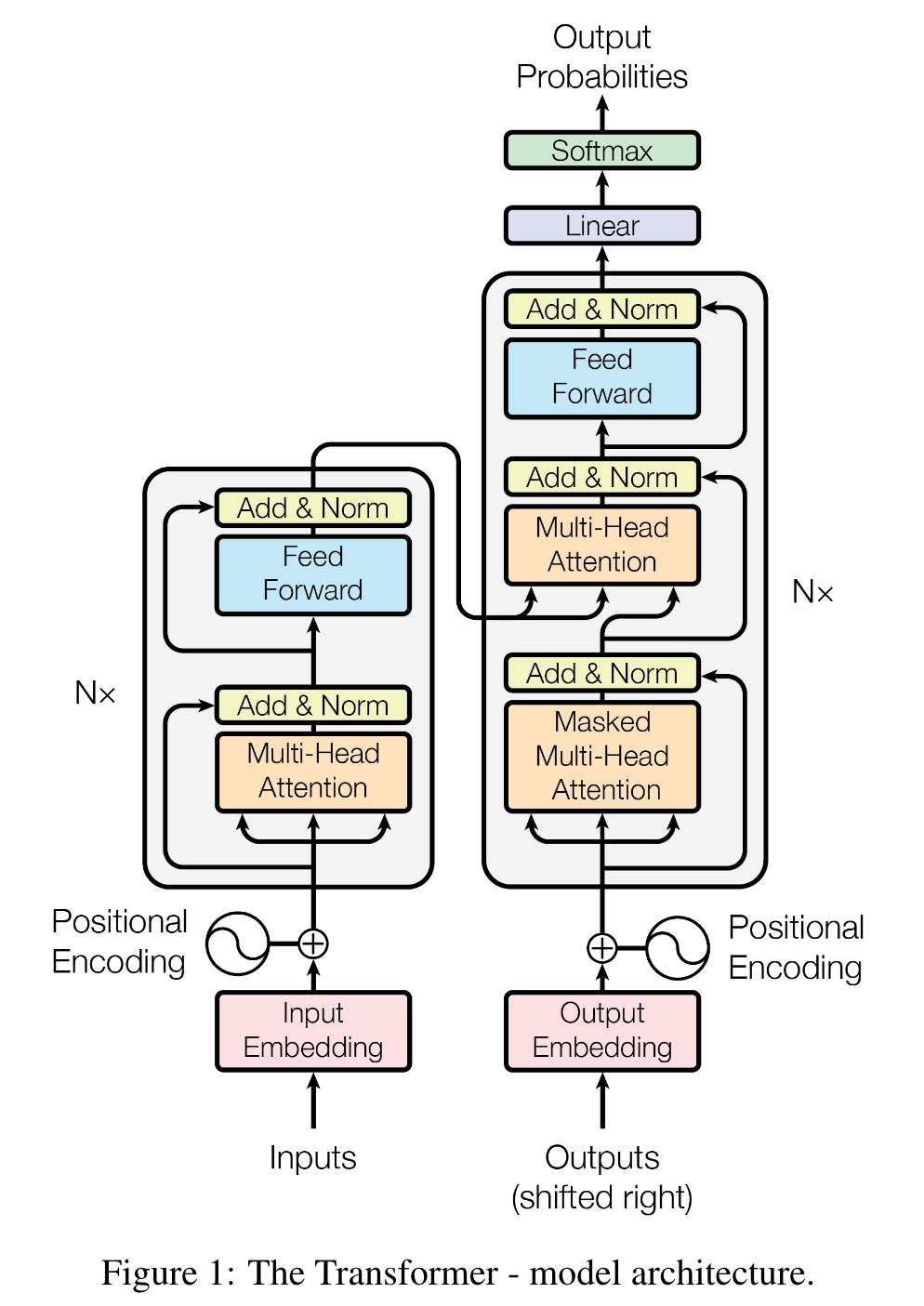
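The adversarial training described above is usually written as a two-player minimax game. The form below is the objective from the original GAN formulation, shown here as a reference point; the paper credited for the image may use a variant of it. Here \(D\) is the discriminator, \(G\) is the generator, \(x\) is a real sample, and \(z\) is random noise fed to the generator.

\[
\min_G \max_D \;\; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
\]

The discriminator raises this value by scoring real samples near 1 and generated samples near 0. The generator lowers it by producing samples that the discriminator scores near 1.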

Transformer Networks

Transformer networks are a type of neural network architecture used in natural language processing (NLP) tasks such as machine translation and sentiment analysis.

The key feature of transformer networks is the self-attention mechanism, which allows the network to weigh different parts of the input sequence differently based on relevance. Self-attention takes the place of the recurrent state used in an RNN as the way to model relationships between positions in the sequence.

Images: A. Vaswani et al., “Attention Is All You Need,” arXiv:1706.03762 [cs], Dec. 2017 [Online]. Available: http://arxiv.org/abs/1706.03762.
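A minimal sketch of scaled dot-product self-attention, the core operation from the paper cited above, is shown here in plain Python. As a simplifying assumption, the queries, keys, and values are all set to the raw input vectors; a real transformer first multiplies the input by learned query, key, and value matrices. The token vectors are made up for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """softmax(Q K^T / sqrt(d)) V with Q = K = V = x (no learned projections)."""
    d = len(x[0])
    weights = []
    for query in x:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
            for key in x
        ]
        weights.append(softmax(scores))
    outputs = [
        [sum(w * value[j] for w, value in zip(row, x)) for j in range(d)]
        for row in weights
    ]
    return outputs, weights


# Three illustrative 2-D token vectors; tokens 0 and 1 are similar.
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` says how much one position attends to every other position. Every row is computed independently of the others, which is what makes the operation easy to parallelize.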

Transformer: Key Features

- Self-attention mechanism: allows the network to weigh different parts of the input sequence differently based on relevance

- No recurrent or convolutional layers: allows for parallel processing and faster training time

- Multi-head attention: allows the network to learn more complex relationships between different parts of the input sequence

- Advantages

  - Improved performance over traditional RNN-based models

  - Parallelizable and efficient

  - Can handle longer input sequences

- Disadvantages

  - Every position attends to every other position, so the time and memory cost of self-attention grows quadratically with sequence length.

- Transformer Paper: https://arxiv.org/abs/1706.03762

- Transformer Paper explained: https://towardsdatascience.com/attention-is-all-you-need-discovering-the-transformer-paper-73e5ff5e0634

- How Transformers Work: https://towardsdatascience.com/transformers-141e32e69591

Modern Large Language Model (LLM) Architectures

A Large Language Model (LLM) is a type of NLP model designed to generate text that resembles human writing.

LLM: Key Features

- Pre-trained on large amounts of text data

- Fine-tuned on specific tasks, such as question answering or language generation

- Typically use transformer networks as the underlying architecture

- Advantages

  - Can produce high-quality, human-like text

  - Can be adapted to a variety of NLP tasks with minimal tweaking

  - OpenAI’s GPT-4, one of the most advanced LLMs, has demonstrated impressive results on a wide range of language tasks.

- Disadvantages

  - Known to “hallucinate” (give incorrect answers with high confidence).

  - Legal issues surrounding training datasets and potential for copyright violation.

  - … ??? (too early to tell)

Transfer Learning

- Deep learning models are difficult to train, and require massive labeled datasets.

- Many real-world tasks have similarities though…

Is identifying birds that different from identifying objects in an image? They are both visual tasks… They both require us to use similar parts of our vision system. It makes sense that a network trained for one task might be able to become quickly proficient at a different (but similar) task.

- Train a system on task A. Starting from the pre-learned weights \(W_A\) (except perhaps the top layer), train for additional epochs on a specialized task B. This “retraining” should take less time and fewer examples than the original training.

- Heavily used in applied machine learning, such as biomedical imaging, agricultural applications, etc.
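The recipe above (keep \(W_A\), replace and retrain the top layer) can be sketched without any framework. This is a toy illustration under stated assumptions: the `W_A` values stand in for weights that would normally come from a pre-trained model, task B is a made-up four-example dataset, and only the new top layer is updated. In practice you would do the same thing with a framework such as Keras or PyTorch.

```python
import math

# Stand-in for weights learned on task A (hypothetical values).
W_A = [[1.0, -1.0], [0.5, 1.0], [-1.0, 0.5]]


def features(x):
    """Frozen lower layer: reuses W_A and is never updated."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in W_A]


def predict(x, head):
    """New top layer for task B: logistic regression on the frozen features."""
    score = sum(h * f for h, f in zip(head, features(x)))
    return 1.0 / (1.0 + math.exp(-score))


def log_loss(data, head):
    """Mean binary cross-entropy over the dataset."""
    total = 0.0
    for x, y in data:
        p = predict(x, head)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)


def fine_tune_head(data, head, lr=0.5, epochs=200):
    """Stochastic gradient descent on the head only; W_A stays fixed."""
    head = list(head)
    for _ in range(epochs):
        for x, y in data:
            error = predict(x, head) - y
            f = features(x)
            head = [h - lr * error * fi for h, fi in zip(head, f)]
    return head


# Made-up task B: label is 1 when the first coordinate is the larger one.
task_b = [([1.0, 0.0], 1), ([0.0, 1.0], 0), ([2.0, 1.0], 1), ([1.0, 2.0], 0)]

head0 = [0.0, 0.0, 0.0]
loss_before = log_loss(task_b, head0)
head = fine_tune_head(task_b, head0)
loss_after = log_loss(task_b, head)
print(loss_before, loss_after)
```

Only three numbers (the head) are trained here, which is why this kind of retraining can get by with far fewer examples than training the whole network from scratch.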

Few-Shot Learning

Labeled data is usually hard to get. (Correctly labeled data is even harder.)

We need techniques to train networks with fewer labeled examples.

The trick is to first train the network on a task whose labels can be generated automatically; the final training then requires less labeled data.

Few-Shot Learning survey paper: https://arxiv.org/abs/1904.05046

Finding Existing Models

You can often use an existing model that can either apply directly to your task or can be fine-tuned through transfer learning to fit your task. Some places to look are listed below:

- Model Zoo: https://modelzoo.co/

- Keras Applications: https://keras.io/api/applications/

- PyTorch Hub: https://pytorch.org/hub/

- Hugging Face Models: https://huggingface.co/models

- Eleuther AI: https://www.eleuther.ai/releases

Choosing a Model by Task

The following article looks at three kinds of machine learning tasks (classification, regression, and clustering) and presents some of the best-known models for each.

https://elitedatascience.com/machine-learning-algorithms

Here is a similar article that covers many more kinds of machine learning tasks, including the three above.

https://www.dataquest.io/blog/top-10-machine-learning-algorithms-for-beginners/

Papers with Code maintains a repository of state-of-the-art research papers and provides open-source implementations and evaluation metrics.

https://paperswithcode.com/sota

Learn More

Deep Learning Book (by Ian Goodfellow, Yoshua Bengio, and Aaron Courville) - a comprehensive textbook covering a wide range of deep learning topics. https://www.deeplearningbook.org/

Papers with Code - a website that aggregates recent research papers and links each one to open-source implementations and evaluation metrics. https://paperswithcode.com

MIT Deep Learning Series - a collection of video lectures by prominent researchers, designed to give a broad overview of the field of machine learning and deep learning. https://deeplearning.mit.edu/

Coursera Deep Learning Specialization - a series of online courses providing a graduate-level introduction to deep learning. https://www.coursera.org/specializations/deep-learning

TensorFlow.org - a popular open-source platform for constructing and training machine learning models, including deep learning. https://www.tensorflow.org/

PyTorch.org - another popular open-source platform for constructing ML models. Probably more popular than TensorFlow among ML researchers at the moment. https://pytorch.org/

CS 4/5623 Fundamentals of Data Science